Vector Index

Overview

A vector index is designed to efficiently store and manage high-dimensional vectors (or embeddings), enabling fast retrieval of similar vectors based on a chosen similarity metric. Instead of performing an exhaustive search across all stored vectors, the vector index significantly reduces the search space, making nearest-neighbor retrieval more efficient.

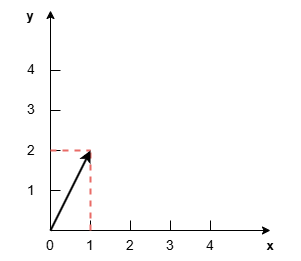

Vectors and Embeddings

Vectors can be viewed as an ordered list of numbers. For example, the vector [1, 2] represents a direction from the origin to the point (1, 2) in a two-dimensional space, and the distance (or magnitude) to that point.

In the context of machine learning and natural language processing (NLP), the term embedding is commonly used when referring vectors. An embedding is a high-dimensional vector that represents data, often with hundreds of dimensions.

Creating Embeddings

You can create embeddings for both structured data (e.g., text) and unstructured data (e.g., images, graphs) using various models. Some popular models include:

- OpenAI: A collection of models to turn text into embeddings.

- Node2Vec: A model that generates embeddings for graph nodes based on their relationships.

- ResNet (Residual Networks): A series of models for generating image embeddings.

Why Embeddings

Embeddings are often generated by considering the context of the data entity rather than just the data itself. This is especially true for tasks like NLP, where the meaning of a word is influenced by its surrounding context.

Consider the word "bank", which has multiple meanings depending on the context:

- Bank as a financial institution: "I went to the bank to deposit some money."

- Bank as the side of a river: "We sat on the bank of the river to watch the sunset."

This contextual information makes embeddings much more powerful for downstream tasks like semantic search, recommendation and classification.

Loading Embeddings into Ultipa

After creating embeddings for entities, they can be imported or stored into Ultipa as properties of type list<float> or list<double>. By creating vector indexes for these properties, you can perform vector searches.

Vector Search

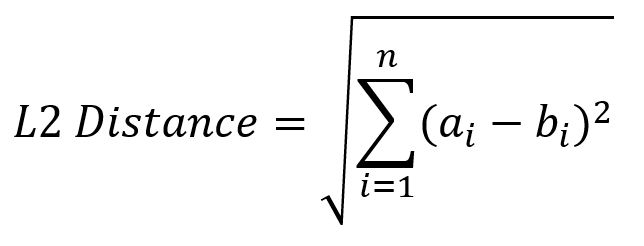

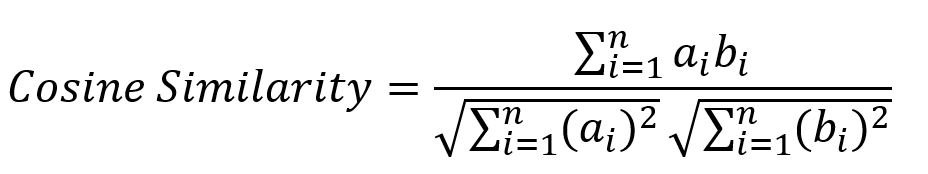

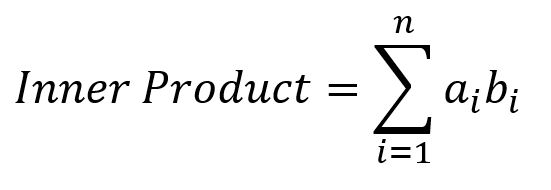

Vector search refers to the process of finding vectors that are most similar to a given query vector, using a similarity measure. Ultipa supports the following similarity measures:

- L2 Distance (Euclidean Distance): Calculates the straight-line distance between two points in a high-dimensional space. This measure is sensitive to the magnitude of the vectors.

- Cosine Similarity: Measures the cosine of the angle between two vectors, indicating their orientation in space. It is independent of vector magnitude.

- Inner Product: Computes the dot product between two vectors, often used when the magnitude of the vectors is important.

Example Graph

The example graph consists of 10 Book nodes, each containing the properties name, author, summary, and summaryEmbedding. The summaryEmbedding holds the 384-dimensional text embeddings of the summary, generated using the all-MiniLM-L6-v2 model from Hugging Face.

CREATE GRAPH myGraph { NODE Book ({title string, author string, summary text, summaryEmbedding list<double>}) } PARTITION BY HASH(Crc32) SHARDS [1]

Showing Vector Indexes

To retrieve node vector indexes in the current graph:

GQLSHOW NODE VECTOR INDEX

The information about vector indexes is organized into a _nodeVectorIndex table with the following fields:

Field | Description |

|---|---|

name | Vector index name. |

schema | The schema of the vector index. |

properties | The property of the vector index. |

vector_server_name | The vector server that hosts the vector index. |

status | Vector index status, which can be DONE or CREATING. |

config | Vector index configuration, including similarity_function, index_type, and dimensions. |

Creating a Vector Index

You can create a vector index using the CREATE VECTOR INDEX statement for a node property of the list<float> or list<double> type. The vector index creation runs as a job, you may run SHOW JOB <id?> afterward to verify the success of the creation.

To create a vector index named summary_embedding for the property summaryEmbedding of Book nodes:

GQLCREATE VECTOR INDEX "summary_embedding" ON NODE Book (summaryEmbedding) OPTIONS { similarity_function: "COSINE", index_type: "FLAT", dimensions: 384, vector_server: "vector_server_1" }

To create a vector index for all node labels:

GQLCREATE VECTOR INDEX "all_embeddings" ON NODE * (embedding) OPTIONS { similarity_function: "COSINE", index_type: "HNSW", dimensions: 1536 }

Details

- The vector index name must be unique. Naming conventions are:

- 2 to 64 characters.

- Begins with a letter.

- Allowed characters: letters (A-Z, a-z), numbers (0-9) and underscores (

_).

- A vector index is applied to a single label and a single property. Use

*to apply to all labels. - Configurations for a vector index:

Item | Type | Default | Description |

|---|---|---|---|

similarity_function | String | L2 | The similarity function used to assess the similarity of two vectors. Supports L2, COSINE, and IP. Learn more |

index_type | String | FLAT | The method used to organize and search the vectors in the index. Supports FLAT, IVF_FLAT, IVF_SQ8, HNSW, HNSW_SQ, HNSW_PQ, HNSW_PRQ, and SCANN. Learn more |

dimensions | Integer | 128 | The dimensions of the vectors to be indexed. Only vectors of the configured dimension are indexed, and querying the index with a vector of a different dimensions will return an error. |

m | Integer | 16 | HNSW parameter: Maximum number of connections per node. Higher values improve recall but increase memory and build time. |

efConstruction | Integer | 200 | HNSW parameter: Size of dynamic candidate list during index construction. Higher values improve quality but increase build time. |

vector_server | String | / | The name of the vector server that hosts the vector index. |

Dropping a Vector Index

You can drop a vector index using the DROP VECTOR INDEX statement. Dropping a vector index does not affect the actual property values stored in shards.

NOTEA property with a vector index cannot be dropped until the vector index is deleted.

To drop the node vector index summary_embedding:

GQLDROP NODE VECTOR INDEX summary_embedding

Use IF EXISTS to avoid errors when dropping a non-existent index:

GQLDROP NODE VECTOR INDEX IF EXISTS summary_embedding

Using Vector Indexes

You can use a vector index for vector search by calling the vector.queryNodes() procedure.

Syntax

SyntaxCALL vector.queryNodes("<vectorIndexName>", <numMostSimNodes>, <targetVector>)

Parameters

Params | Description |

|---|---|

vectorIndexName | The name of the vector index to be used. |

<numMostSimNodes> | Number of the nodes to retrieve that have the most similar vectors to <targetVector>. Note that the <targetVector> itself is included. |

<targetVector> | The target vector. |

Returns

_uuid:_uuidof the retrieved node.score: The similarity score between the vector of retrieved node and the target vector.

Example

Finds the top two books most similar to Pride and Prejudice by the vector index summary_embedding, return the book names along with the similarity scores between them:

GQLMATCH (target:Book {title: "Pride and Prejudice"}) CALL vector.queryNodes('summary_embedding', 3, target.summaryEmbedding) YIELD result MATCH (book WHERE book._uuid = result._uuid) RETURN table(book.title, result.score)

Result:

| book.title | result.score |

|---|---|

| Pride and Prejudice | 1 |

| One Hundred Years of Solitude | 0.39629873633384705 |

| The Great Gatsby | 0.3709701597690582 |

Annex: Index Types

Index Type | Description |

|---|---|

FLAT | A brute-force method where all vectors are stored and compared directly. This method ensures accurate results, but it is computationally expensive and inefficient when dealing with large datasets. |

IVF_FLAT | The IVF (Inverted File) method partitions the vectors into cluster units, and the search only takes place within the most relevant units. IVF_FLAT uses a FLAT approach within each unit, providing faster searches with a slight compromise on accuracy. |

IVF_SQ8 | SQ8 (Scalar Quantization with 8-bit) uses scalar quantization to compress vectors in each unit into an 8-bit representation. It reduces storage requirements and increases search speed at the cost of slightly lower precision. |

HNSW | HNSW (Hierarchical Navigable Small World) is a graph-based indexing method that organizes vectors into a graph structure for fast approximate nearest neighbor search. It is highly efficient, especially for large datasets, and tends to outperform other methods in terms of search speed and recall. |

HNSW_SQ | It combines the HNSW method with SQ (Scalar Quantization) to reduce memory usage while maintaining search efficiency. |

HNSW_PQ | It uses PQ (Product Quantization) to enhance the HNSW method by compressing the vectors, resulting in a more memory-efficient structure with good performance. |

HNSW_PRQ | It combines the benefits of HNSW_PQ with a re-ranking mechanism to improve accuracy after an initial fast search. This method ensures both speed and high precision. |

SCANN | SCANN (Scalable Nearest Neighbor) is an advanced method developed by Google for efficient nearest neighbor search. It utilizes techniques like quantization and partitioning to provide extremely fast retrieval speeds, especially on very large datasets. |