The Skip-gram (SG) model is a prominent approach designed for creating word embeddings in the field of natural language processing (NLP). It has also been used in graph embedding algorithms such as Node2Vec and Struc2Vec to generate node embeddings.

Background

The origin of the Skip-gram model can be traced back to the Word2Vec algorithm. Word2Vec maps words to a vector space, where semantically similar words are represented by vectors that are close in proximity. Google's T. Mikolov et al. introduced Word2Vec in 2013.

- T. Mikolov, K. Chen, G. Corrado, J. Dean, Efficient Estimation of Word Representations in Vector Space (2013)

- X. Rong, word2vec Parameter Learning Explained (2016)

In the realm of graph embedding, the inception of DeepWalk in 2014 marked the application of the Skip-gram model to generate vector representations of nodes within graphs. The core concept is treating nodes as "words", the node sequences generated by random walks as "sentences" and "corpus".

- B. Perozzi, R. Al-Rfou, S. Skiena, DeepWalk: Online Learning of Social Representations (2014)

Subsequent graph embedding methods such as Node2Vec and Struc2Vec have enhanced the DeepWalk approach while still leveraging the Skip-gram framework.

We will illustrate the Skip-gram model using the context of its original application in natural language processing.

Model Overview

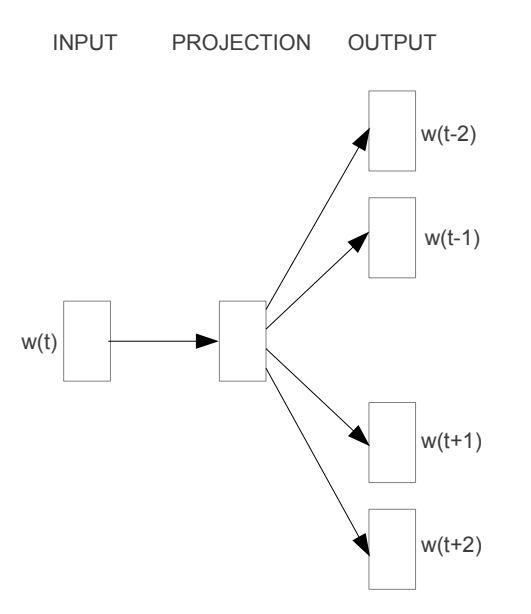

The fundamental principle underlying Skip-gram is to predict a set of context words for a given target word. As depicted in the diagram below, the input word, denoted as , is fed into the model; the model then generates four context words related to : , , , and . Here, the symbols / signify the preceding and succeeding context in relation to the target word. The number of context words produced can be adjusted as needed.

However, it's important to recognize that the ultimate objective of the Skip-gram model is not prediction. Instead, its real task to derive the weight matrix found within the mapping relationship (indicated as PROJECTION in the diagram), which effectively represents the vectorized representations of words.

Corpus

A corpus is a collection of sentences or texts that a model utilizes to learn the semantic relationships between words.

Consider a vocabulary containing 10 distinct words drawn from a corpus: graph, is, a, good, way, to, visualize, data, very, at.

These words can be constructed into sentences such as:

Graph is a good way to visualize data.

Sliding Window Sampling

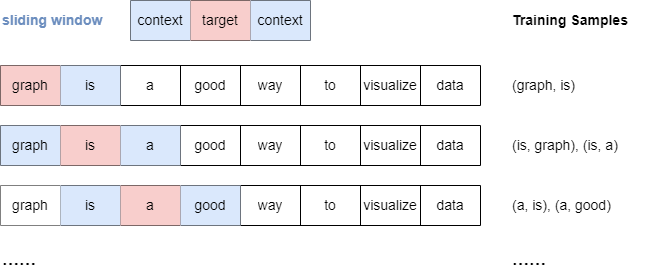

The Skip-gram model employs the sliding window sampling technique to generate training samples. This method uses a "window" that moves sequentially through each word in the sentence. The target word is combined with every context word before and after it, within a certain range window_size.

Below is an illustration of the sampling process when window_size.

It's important to note that when window_size, all context words falling within the specified window are treated equally, regardless of their distance from the target word.

One-hot Encoding

Since words are not directly interpretable by models, they must be converted into machine-understandable representations.

One common method for encoding words is the one-hot encoding. In this approach, each word is represented as a unique binary vector where only one element is "hot" () while all others are "cold" (). The position of the in the vector corresponds to the index of the word in the vocabulary.

Here is how one-hot encoding is applied to our vocabulary:

| Word | One-hot Encoded Vector |

|---|---|

| graph | |

| is | |

| a | |

| good | |

| way | |

| to | |

| visualize | |

| data | |

| very |

Skip-gram Architecture

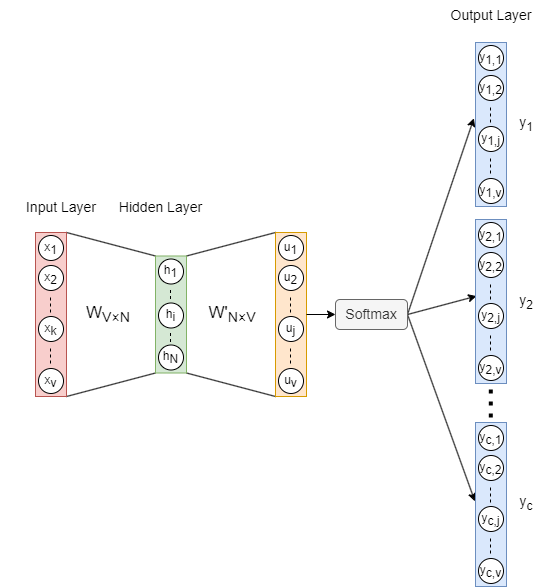

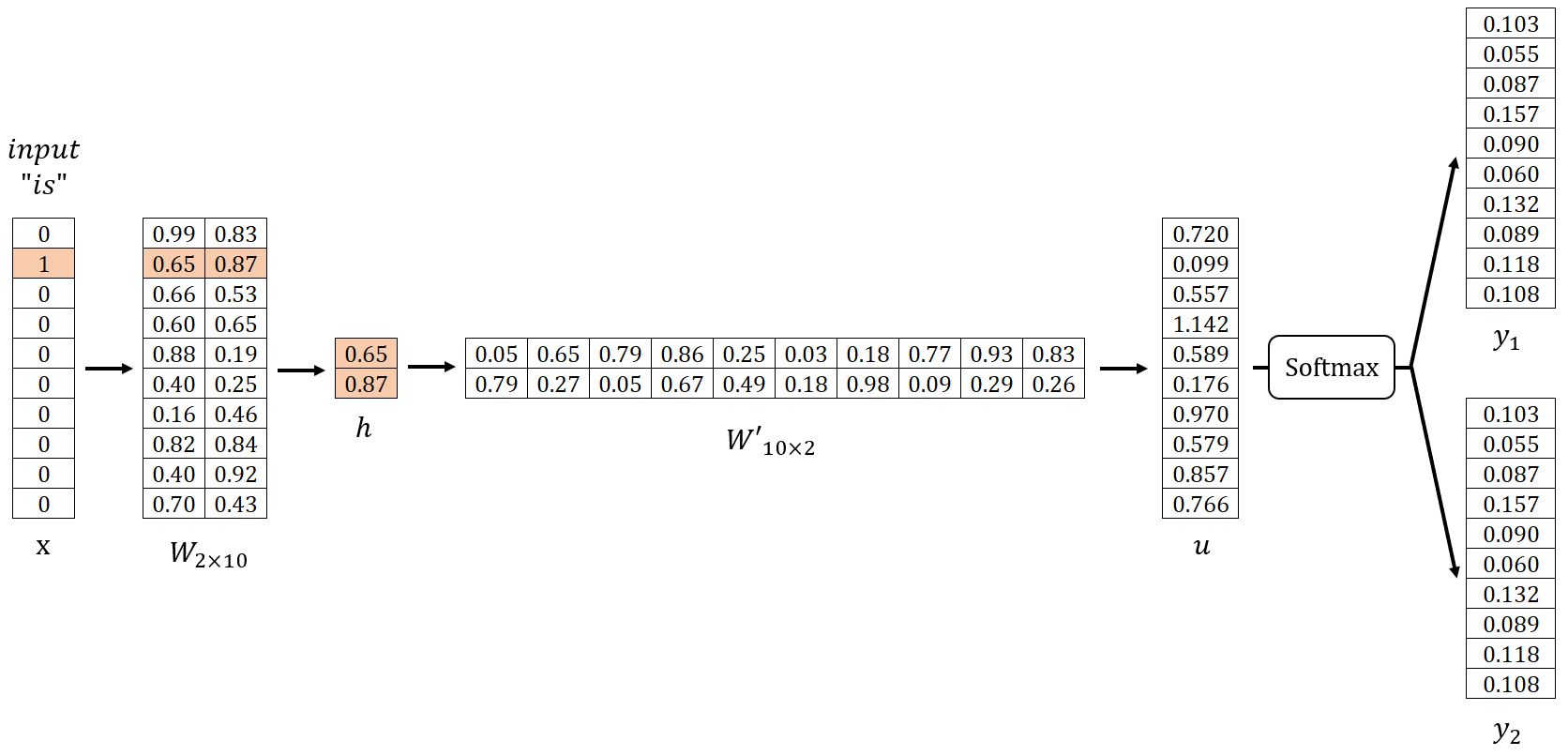

The architecture of the Skip-gram model is depicted as above, where:

- The input vector is the one-hot encoding of the target word, and is the number of words in the vocabulary.

- is the input→hidden weight matrix, and is the dimension of word embeddings.

- is the hidden layer vector.

- is the hidden→output weight matrix. and are different, is not the transpose of .

- is the vector before applying the activation function Softmax.

- The output vectors () are also referred to as panels, panels correspond to context words of the target word.

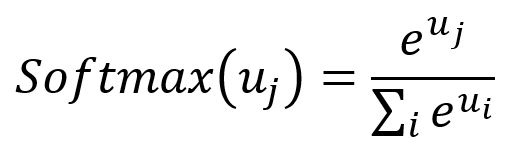

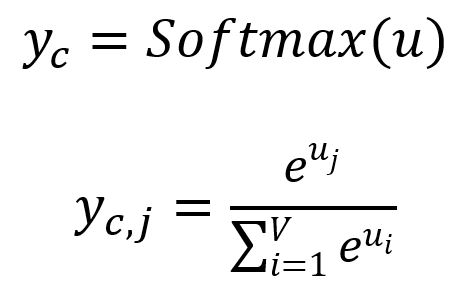

Softmax: Softmax function as an activation function, serving to normalize a numerical vector into a probability distribution vector. In this transformed vector, the summation of all probabilities equals . The formula for Softmax is as follows:

Forward Propagation

In our example, , and set . Let's begin by randomly initializing the weight matrices and as shown below. We will then use the sample (is, graph), (is, a) for demonstration.

Input Layer → Hidden Layer

Get the hidden layer vector by:

Given is a one-hot encoded vector with only , corresponds to the -th row of matrix . This operation is essentially a simple lookup process:

where is the input vector of the target word.

In fact, each row of matrix , denoted as , is viewed as the final embedding of each word in the vocabulary.

Hidden Layer → Output Layer

Get the vector by:

The -th component of vector equals to the dot product of vector and the transpose of the -th column vector of matrix :

where is the output vector of the -th word in the vocabulary.

In the design of the Skip-gram model, each word within the vocabulary has two distinct representations: the input vector and the output vector . The input vector serves as the representation when the word is used as the target, while the output vector represents the word when it acts as the context.

During computation, is essentially the dot product of the input vector of the target word and the output vector of the -th word . The Skip-gram model is also designed with the principle that greater similarity between two vectors yields a larger dot product of those vectors.

Besides, it is important to highlight that only the input vectors are ultimately utilized as the word embeddings. This separation of input and output vectors simplifies the computation process, enhancing both efficiency and accuracy in the model's training and inference.

Get each output panel by:

where is the -th component of , representing the probability of the -th word within the vocabulary being predicted while considering the given target word. Apparently, the sum of all probabilities is .

The words with the highest probabilities are considered as the predicted context words. In our example, and the predicted context words are good and visualize.

Backpropagation

To adjust the weights in and , SGD is used to backpropagate the errors.

Loss Function

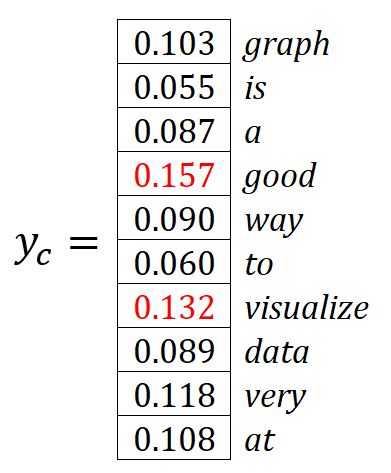

We want to maximize the probabilities of the context words, i.e., to maximize the product of these probabilities:

where is the index of the expected -th output context word.

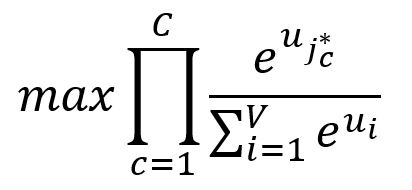

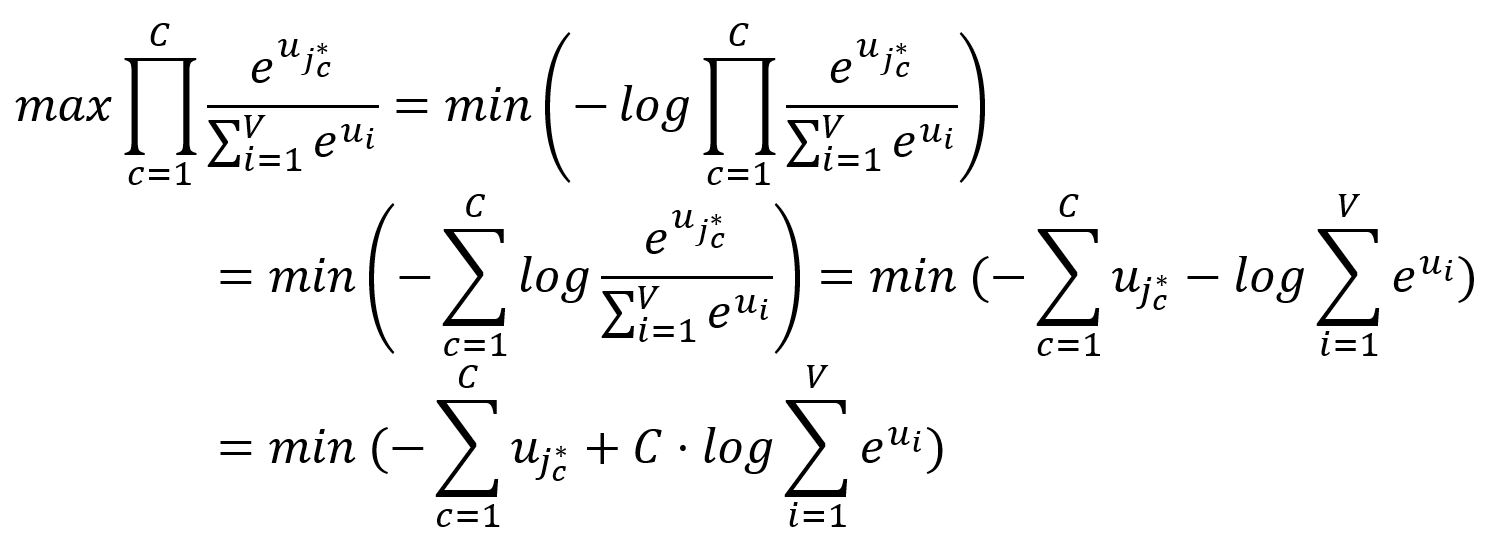

Since minimization is often considered more straightforward and practical than maximization, we perform some transformations to the above goal:

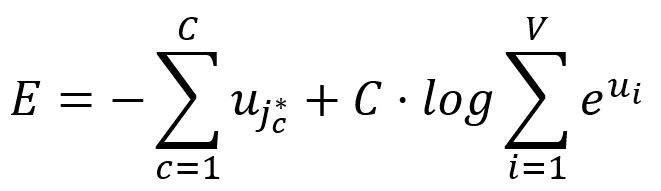

Therefore, the loss function of Skip-gram is expressed as:

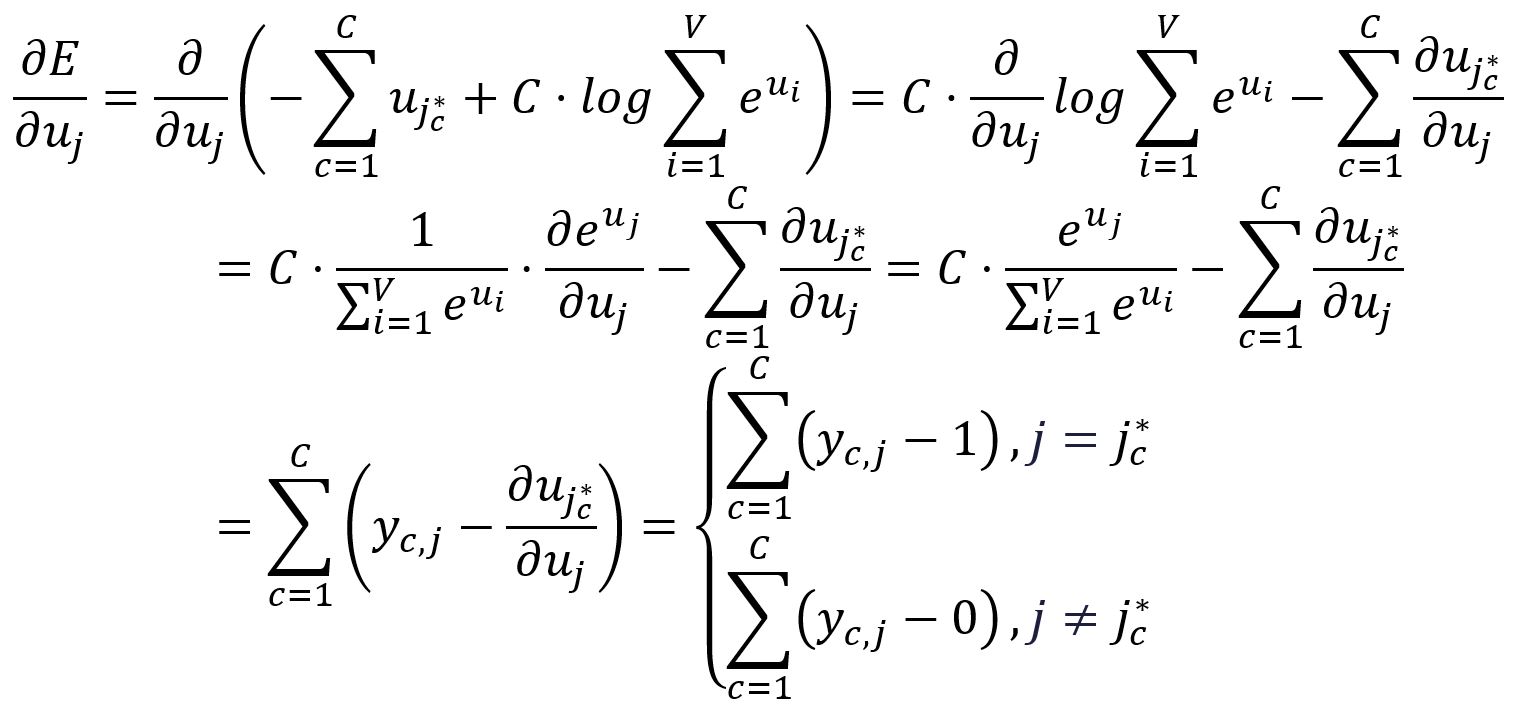

Take the partial derivative of with respect to :

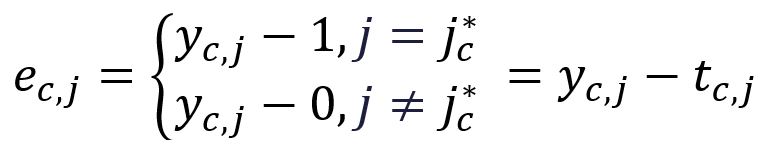

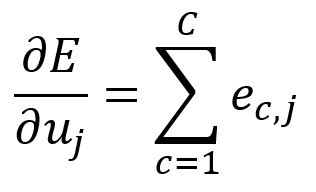

To simply the notaion going forward, define the following:

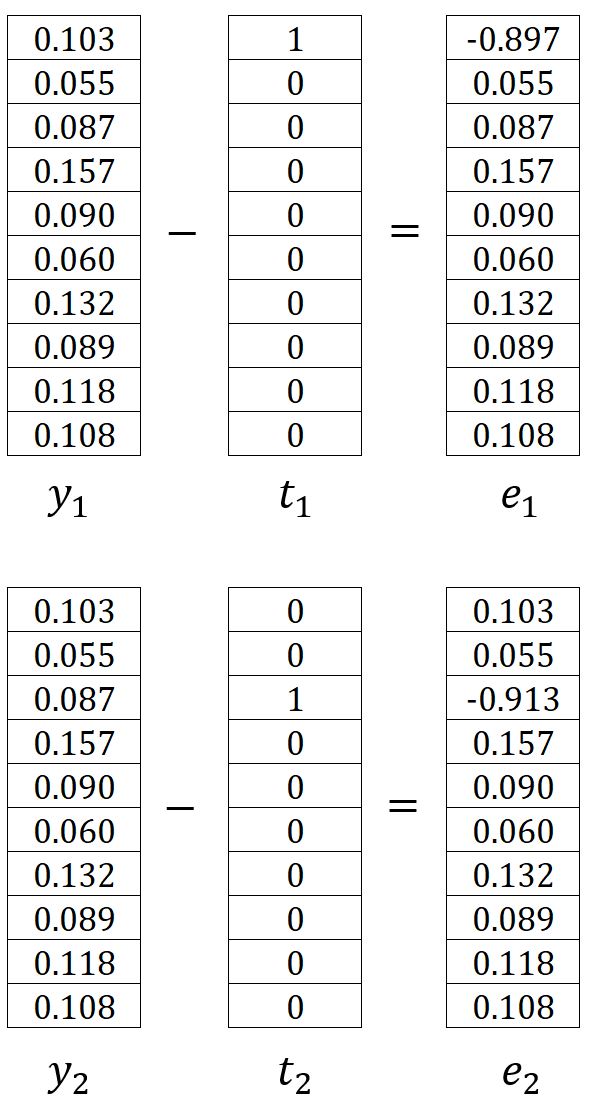

where is the one-hot encoding vector of the -th expected output context word. In our example, and are the one-hot encoded vectors of words graph and a, thus and are calculated as:

Therefore, can be written as:

In our example, it is calculated as:

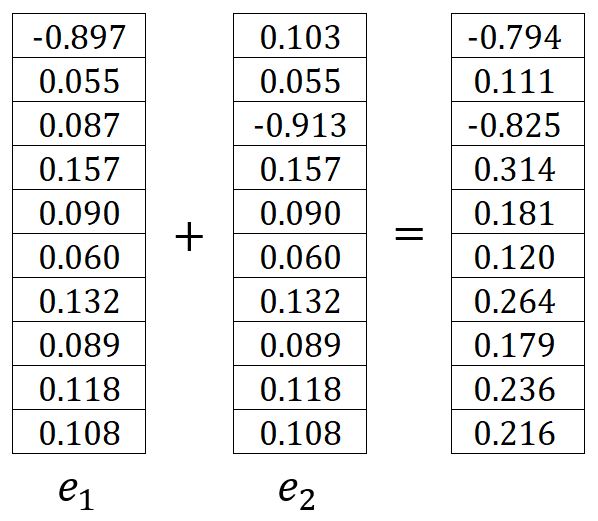

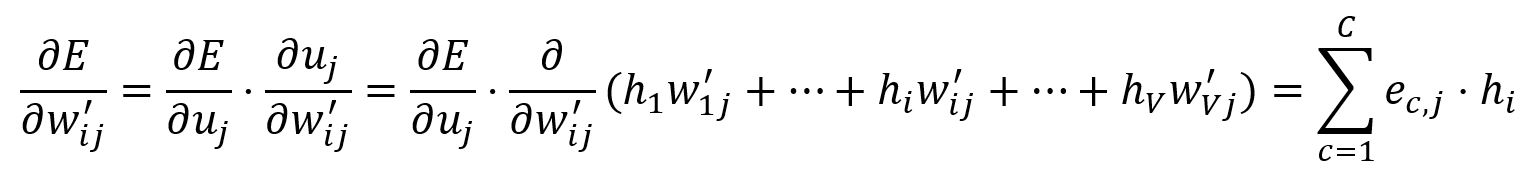

Output Layer → Hidden Layer

Adjustments are made to all weights in matrix , which means that all the output vectors of words are updated.

Calculate the partial derivative of with respect to :

Adjust according to the learning rate :

Set . For instance, and are updated to:

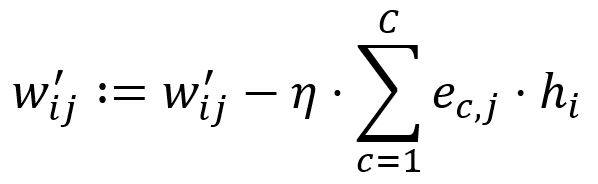

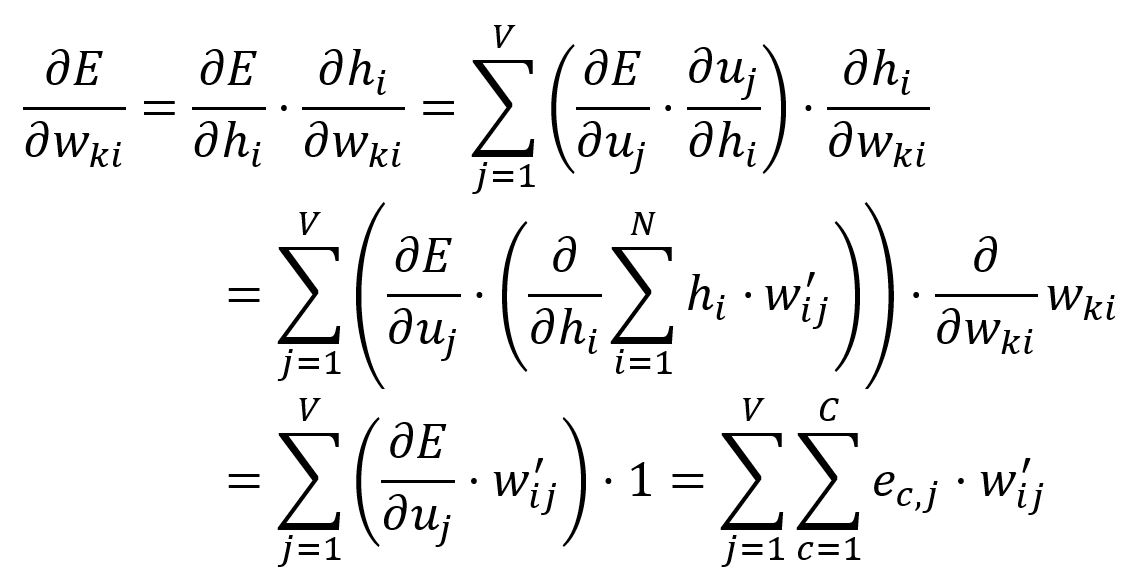

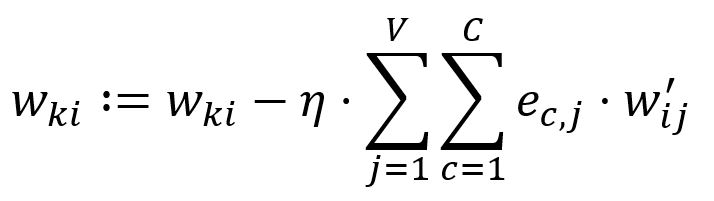

Hidden Layer → Input Layer

Adjustments are made only to the weights in matrix that correspond to the input vector of the target word.

The vector is obtained by only looking up the -th row of matrix (given that ):

Calculate the partial derivative of with respect to :

Adjust according to the learning rate :

In our example, , hence, and are updated:

Optimization

We have explored the fundamentals of the Skip-gram model. Nevertheless, incorporating optimizations is imperative to ensure the model's computational complexity remains viable and pragmatic for real-world applications. Click here to continue reading.