Overview

LINE (Large-scale Information Network Embedding) is a network embedding model that preserves the local or global structure of a network. It scales to very large networks of arbitrary types and was originally proposed in 2015:

- J. Tang, M. Qu, M. Wang, M. Zhang, J. Yan, Q. Zhu, LINE: Large-scale Information Network Embedding (2015)

Concepts

First-order Proximity and Second-order Proximity

First-order proximity is the local proximity between two nodes in a network, determined by whether they are directly connected. A link between two nodes, or a link with a larger weight (negative weights are not considered here), signifies greater first-order proximity; if no link exists between them, their first-order proximity is 0.

On the other hand, second-order proximity between a pair of nodes is the similarity between their neighborhood structures, which is determined by their shared neighbors. If two nodes have no common neighbors, their second-order proximity is 0.

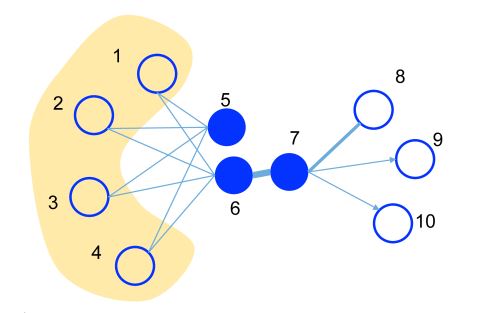

In this illustrative example, edge thickness indicates edge weight.

- The heavy weight on the edge between nodes 6 and 7 indicates a high first-order proximity. The two nodes should have close representations in the embedding space.

- Though nodes 5 and 6 are not directly connected, their many common neighbors give them a notable second-order proximity. They are expected to be represented close to each other in the embedding space as well.
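
The two notions can be sketched in a few lines of Python. The toy graph below is hypothetical (it is not the graph in the figure), and cosine similarity between weighted neighbor vectors is only one possible way to quantify second-order proximity; it is an assumption made for illustration, not a definition taken from LINE.

```python
import math

# Hypothetical undirected weighted graph: (node, node) -> weight.
edges = {
    ("a", "b"): 5.0,  # heavy edge: high first-order proximity
    ("c", "x"): 1.0,
    ("c", "y"): 1.0,
    ("d", "x"): 1.0,
    ("d", "y"): 1.0,  # c and d share neighbors x and y but are not linked
}

def neighbors(node):
    """Return {neighbor: weight} for a node, treating edges as undirected."""
    result = {}
    for (u, v), w in edges.items():
        if u == node:
            result[v] = w
        elif v == node:
            result[u] = w
    return result

def first_order(u, v):
    """Edge weight between u and v, or 0 if they are not linked."""
    return edges.get((u, v), edges.get((v, u), 0.0))

def second_order(u, v):
    """Cosine similarity of the weighted neighbor vectors of u and v (assumed measure)."""
    nu, nv = neighbors(u), neighbors(v)
    dot = sum(w * nv[n] for n, w in nu.items() if n in nv)
    norm_u = math.sqrt(sum(w * w for w in nu.values()))
    norm_v = math.sqrt(sum(w * w for w in nv.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

print(first_order("a", "b"))   # linked pair with a heavy edge
print(first_order("c", "d"))   # unlinked pair
print(second_order("c", "d"))  # identical neighborhoods
print(second_order("a", "c"))  # no common neighbors
```

Here `c` and `d` have zero first-order proximity but a high second-order proximity, which mirrors the situation of nodes 5 and 6 described above.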

LINE Model

The LINE model is designed to embed nodes in graph G = (V,E) into low-dimensional vectors, preserving the first- or second-order proximity between nodes.

LINE with First-order Proximity

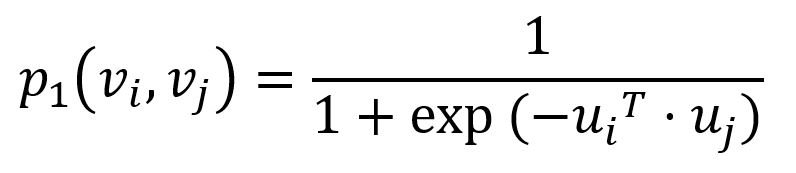

To capture the first-order proximity, LINE defines the joint probability for each edge (i,j)∈E connecting nodes vi and vj as follows:

where ui is the low-dimensional vector representation of node vi. The joint probability p1 ranges from 0 to 1, with two closer vectors resulting in a higher dot product and, consequently a higher joint probability.

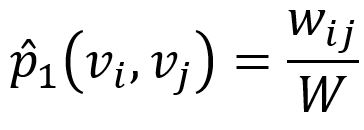

Empirically, the joint probability between node vi and vj can be defined as

where wij denotes the edge weight between nodes vi and vj, W is the sum of all edge weights in the graph.

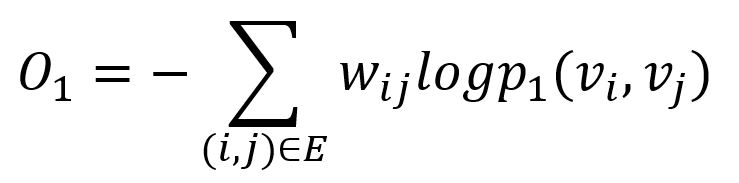

The KL-divergence is adopted to measure the difference between two distributions:

This serves as the objective function that needs to be minimized during training when preserving the first-order proximity.
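
The three quantities above can be sketched in Python as follows. The embeddings and edge weights are hypothetical, and the objective is written in the form used in the LINE paper once constant terms of the KL-divergence are dropped, that is, the negative weighted sum of log p1 over all edges. Treat it as an illustration of the formulas, not as the implementation behind `algo(line)`.

```python
import math

# Hypothetical 2-dimensional embeddings u_i for three nodes.
u = {
    1: [0.8, 0.6],
    2: [0.7, 0.7],
    3: [-0.5, 0.2],
}
# Hypothetical weighted edges (i, j) -> w_ij.
w = {(1, 2): 3.0, (2, 3): 1.0}

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def p1(i, j):
    """Model joint probability: sigmoid of the dot product of u_i and u_j."""
    return 1.0 / (1.0 + math.exp(-dot(u[i], u[j])))

def p1_hat(i, j):
    """Empirical joint probability: w_ij / W, with W the total edge weight."""
    return w[(i, j)] / sum(w.values())

def objective_o1():
    """Negative weighted sum of log p1 over edges (KL-divergence minus constants)."""
    return -sum(w_ij * math.log(p1(i, j)) for (i, j), w_ij in w.items())

print(p1(1, 2), p1_hat(1, 2))
print(p1(2, 3), p1_hat(2, 3))
print(objective_o1())
```

Training moves the vectors so that `p1` agrees better with `p1_hat`, which lowers the objective.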

LINE with Second-order Proximity

To model the second-order proximity, LINE defines two roles for each node: one as the node itself, and another as the "context" of other nodes (this concept originates from the Skip-gram model). Accordingly, two vector representations are introduced for each node.

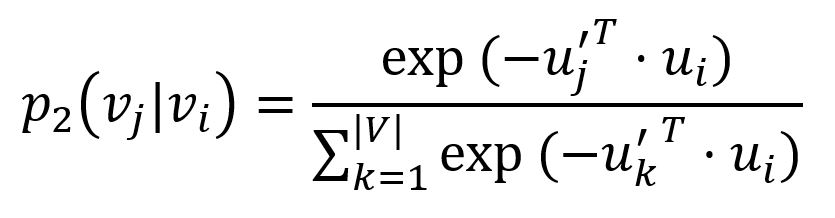

For each edge (i,j)∈E, LINE defines the probability of "context" vj be observed by node vi as

where u'j is the representation of node vj when it is regarded as the "context". Importantly, the denominator involves the whole "context" in the graph.

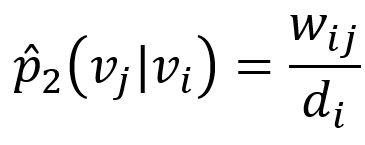

The corresponding empirical probability can be defined as

where wij is weight of edge (i,j), di is the weighted degree of node vi.

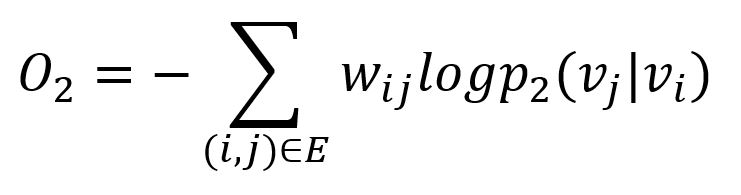

Similarly, the KL-divergence is adopted to measure the difference between two distributions:

This serves as the objective function that needs to be minimized during training when preserving the second-order proximity.
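
A minimal Python sketch of the second-order quantities is given below. All vectors and weights are hypothetical. For brevity each listed pair is read as a directed edge i -> j, so the weighted degree is computed over outgoing edges only; an undirected edge would contribute in both directions. The objective again follows the form in the LINE paper with constant terms dropped.

```python
import math

# Hypothetical vectors: u[i] is node i as itself, c[j] is node j as "context".
u = {1: [0.5, 0.1], 2: [0.2, 0.4], 3: [-0.3, 0.6]}
c = {1: [0.1, 0.3], 2: [0.6, 0.2], 3: [-0.2, 0.5]}
# Hypothetical weighted edges (i, j) -> w_ij, read here as i -> j.
w = {(1, 2): 2.0, (1, 3): 1.0, (2, 3): 1.0}

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def p2(j, i):
    """Model probability of context v_j given v_i: softmax over all contexts."""
    scores = {k: math.exp(dot(c[k], u[i])) for k in c}
    return scores[j] / sum(scores.values())

def p2_hat(j, i):
    """Empirical probability w_ij / d_i, with d_i the weighted degree of v_i."""
    d_i = sum(w_ik for (src, _), w_ik in w.items() if src == i)
    return w.get((i, j), 0.0) / d_i if d_i else 0.0

def objective_o2():
    """Negative weighted sum of log p2 over edges (KL-divergence minus constants)."""
    return -sum(w_ij * math.log(p2(j, i)) for (i, j), w_ij in w.items())

print(p2(2, 1), p2_hat(2, 1))
print(objective_o2())
```

The sum inside `p2` runs over every context vector, which is the cost that negative sampling is meant to avoid.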

Model Optimization

Negative Sampling

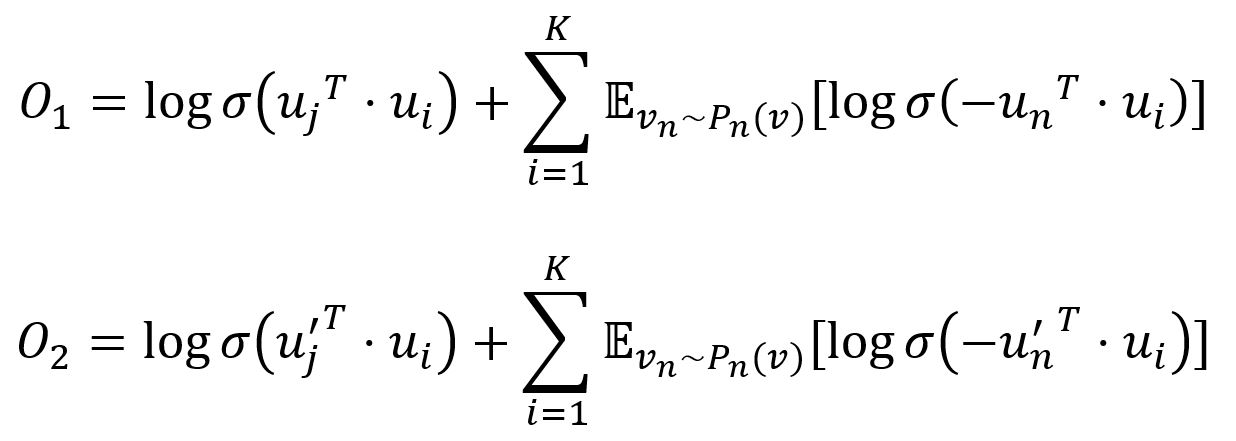

To improve the computation efficiency, LINE adopts the negative sampling approach which samples multiple negative edges according to some noisy distribution for each edge (i,j). Specifically, the two objective functions are adjusted as:

where σ is the sigmoid function, K is the number of negative edges drawn from the noise distribution Pn(v) ∝ dv3/4, dv is the weighted degree of node v.
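
The sketch below illustrates the noise distribution and the per-edge term for the second-order case, written as a loss (the negated objective). The degrees and vectors are hypothetical, and the expectation over the noise distribution is approximated by summing over K sampled negatives. For the first-order case, the context vectors would be replaced by the ordinary node vectors.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def noise_distribution(weighted_degree):
    """P_n(v) proportional to d_v ** 0.75, normalized to sum to 1."""
    raw = {v: d ** 0.75 for v, d in weighted_degree.items()}
    total = sum(raw.values())
    return {v: r / total for v, r in raw.items()}

def negative_sampling_loss(u_i, c_j, negative_contexts):
    """-[log sigmoid(c_j . u_i) + sum over negatives of log sigmoid(-c_n . u_i)]."""
    loss = -math.log(sigmoid(dot(c_j, u_i)))
    for c_n in negative_contexts:
        loss -= math.log(sigmoid(-dot(c_n, u_i)))
    return loss

# Hypothetical weighted degrees and context vectors.
degree = {"a": 16.0, "b": 1.0, "c": 1.0}
context = {"a": [0.4, 0.1], "b": [-0.3, 0.2], "c": [0.1, -0.5]}
p_n = noise_distribution(degree)

# Draw K negative nodes for one positive edge.
rng = random.Random(0)
K = 5
nodes = list(p_n)
negatives = rng.choices(nodes, weights=[p_n[v] for v in nodes], k=K)

u_i = [0.5, 0.2]
loss = negative_sampling_loss(u_i, context["a"], [context[v] for v in negatives])
print(p_n)
print(negatives, loss)
```

Raising the degree to the power 3/4 flattens the distribution, so high-degree nodes are sampled often but less than in direct proportion to their degree.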

Edge-Sampling

Since edge weights appear in both objectives, they are multiplied into the gradients; large weights can cause the gradients to explode and thus compromise performance. To address this, LINE samples edges with probabilities proportional to their weights, and then treats the sampled edges as binary edges when updating the model.
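
Edge sampling can be illustrated with the standard library alone. The edge list below is hypothetical, and `random.choices` is used for simplicity; the LINE paper uses the alias method to draw each sample in constant time, which this sketch does not reproduce.

```python
import random
from collections import Counter

# Hypothetical weighted edges.
edges = [("a", "b"), ("b", "c"), ("c", "d")]
weights = [8.0, 1.0, 1.0]

def sample_edges(edges, weights, num_samples, seed=0):
    """Draw edges with probability proportional to weight.

    Each returned edge is then treated as a unit-weight (binary) edge,
    so the weight no longer scales the gradient of a single update.
    """
    rng = random.Random(seed)
    return rng.choices(edges, weights=weights, k=num_samples)

samples = sample_edges(edges, weights, num_samples=10_000)
counts = Counter(samples)
for edge in edges:
    print(edge, counts[edge] / len(samples))
```

The heavy edge is simply visited more often, so its influence on training still reflects its weight while every individual update stays on the same scale.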

Considerations

- The LINE algorithm ignores the direction of edges and treats them as undirected edges.

Syntax

- Command: algo(line)
- Parameters:

| Name | Type | Spec | Default | Optional | Description |
|---|---|---|---|---|---|
| edge_schema_property | []@<schema>?.<property> | Numeric type, must LTE | / | No | Edge property(-ies) to be used as edge weight(s), where the values of multiple properties are summed up |
| dimension | int | ≥2 | / | No | Dimensionality of the embeddings |
| train_total_num | int | ≥1 | / | No | Total number of training iterations |
| train_order | int | 1, 2 | 1 | Yes | Type of proximity to preserve: 1 means first-order proximity, 2 means second-order proximity |
| learning_rate | float | (0,1) | / | No | Initial learning rate for training the model, which decreases after each training iteration until it reaches min_learning_rate |
| min_learning_rate | float | (0,learning_rate) | / | No | Minimum threshold for the learning rate as it is gradually reduced during training |
| neg_num | int | ≥0 | / | No | Number of negative samples to produce for each positive sample; a value between 0 and 10 is suggested |
| resolution | int | ≥1 | 1 | Yes | Parameter used to enhance negative sampling efficiency; a higher value offers a better approximation to the original noise distribution; a value such as 10 or 100 is suggested |
| limit | int | ≥-1 | -1 | Yes | Number of results to return, -1 to return all results |
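
The interaction between learning_rate, min_learning_rate and train_total_num can be pictured with a small sketch. The exact decay schedule used by the algorithm is not stated here, so the linear decay with a floor shown below is an assumption, and `learning_rate_at` is a hypothetical helper, not part of the command.

```python
def learning_rate_at(step, total_steps, learning_rate, min_learning_rate):
    """Assumed schedule: linear decay, never dropping below min_learning_rate."""
    decayed = learning_rate * (1.0 - step / total_steps)
    return max(decayed, min_learning_rate)

# Values mirror the shape of the parameters: learning_rate in (0,1),
# min_learning_rate in (0,learning_rate), train_total_num >= 1.
train_total_num = 10
for step in range(train_total_num + 1):
    print(step, learning_rate_at(step, train_total_num, 0.01, 0.0001))
```

Whatever the actual schedule, min_learning_rate acts as a lower bound, which is why it must be smaller than learning_rate.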

Example

File Writeback

| Spec | Content |
|---|---|
| filename | _id,embedding_result |

    algo(line).params({
      dimension: 20,
      train_total_num: 10,
      train_order: 1,
      learning_rate: 0.01,
      min_learning_rate: 0.0001,
      neg_num: 5,
      resolution: 100,
      limit: 100
    }).write({
      file: {
        filename: 'embeddings'
      }
    })

Property Writeback

| Spec | Content | Write to | Data Type |
|---|---|---|---|
| property | embedding_result | Node Property | string |

    algo(line).params({
      edge_schema_property: '@branch.distance',
      dimension: 20,
      train_total_num: 10,
      train_order: 1,
      learning_rate: 0.01,
      min_learning_rate: 0.0001,
      neg_num: 5,
      limit: 100
    }).write({
      db: {
        property: 'vector'
      }
    })

Direct Return

| Alias Ordinal | Type | Description | Columns |
|---|---|---|---|
| 0 | []perNode | Node and its embeddings | _uuid, embedding_result |

    algo(line).params({
      edge_schema_property: '@branch.distance',
      dimension: 20,
      train_total_num: 10,
      train_order: 1,
      learning_rate: 0.01,
      min_learning_rate: 0.0001,
      neg_num: 5,
      limit: 100
    }) as embeddings
    return embeddings

Stream Return

| Alias Ordinal | Type | Description | Columns |
|---|---|---|---|
| 0 | []perNode | Node and its embeddings | _uuid, embedding_result |

    algo(line).params({
      edge_schema_property: '@branch.distance',
      dimension: 20,
      train_total_num: 10,
      train_order: 2,
      learning_rate: 0.01,
      min_learning_rate: 0.0001,
      neg_num: 5,
      limit: 100
    }).stream() as embeddings
    return embeddings